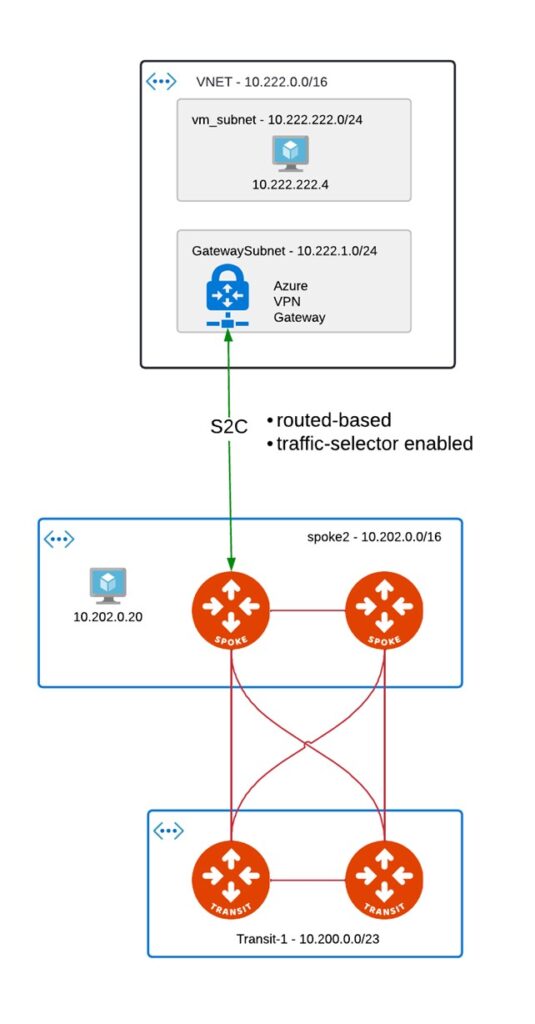

Recently one of my customers asked me how we could improve his current VPN setup to a 3rd-party environment so that it survives a maintenance window. On the customer’s side we have an Aviatrix spoke GW with the HA option enabled. The 3rd party is just an Azure VNG.

Before we jump into optimization, let’s talk about VPN itself (from the Aviatrix point of view). I needed to consolidate my own knowledge, so let me share a few tips I picked up along the way.

A few facts about VPN

VPN is a broad topic and there are many great articles about it. I assume you have basic knowledge, so apologies if I don’t explain everything in detail. This post is also not about encryption algorithms, IKE negotiations or IPsec itself. It is more about the way you do it on the Aviatrix platform.

There are 2 Types of VPNs:

- Policy-Based

- Route-Based

Here is a very good comparison of Policy-Based vs Route-Based VPN by design expert Shahzad Ali of Aviatrix (worth reading, as there is no point in writing about it one more time) – LINK to AVIATRIX community

There are 2 places to configure this on Aviatrix Controller:

Multi-Cloud Transit -> Setup -> external connections

- Only Route-Based

- Supports both BGP and static route-selector (similar in concept to a route-based VNG with the “use policy based traffic selector” knob enabled). Here is a very good explanation of how the VNG handles that: https://github.com/MicrosoftDocs/azure-docs/blob/main/articles/vpn-gateway/vpn-gateway-connect-multiple-policybased-rm-ps.md

- If an Aviatrix HA GW is deployed, both GWs will actively try to establish connections to the same remote GW

Site2Cloud -> Setup

- Supports Policy-Based and Route-Based

- For Route-Based VPN a VTI tunnel is created

- Doesn’t support BGP

- Gives you the option to establish a backup tunnel from the HAGW

- Supports MAPPED NAT, a very useful feature when we have overlapping IPs on both ends. It lets you define NAT in a subnet-to-subnet manner, which simplifies the configuration.

- The CIDR of the remote subnet is not automatically propagated into ActiveMesh (towards the Transits, if the tunnel is terminated on a Spoke). We need to use “Customize Spoke Advertised VPC CIDRs” to inject it into the Aviatrix infrastructure, or use “Auto Advertise Spoke Site2Cloud CIDRs” (mapped tunnels only!)
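To make the MAPPED NAT bullet a bit more concrete, here is a minimal Terraform sketch of a mapped Site2Cloud connection. It assumes both ends really use the same 10.10.0.0/24 range and that each side is presented to the other through a virtual range of the same size. All names, CIDRs, the peer IP and the variable are made up for illustration, and the `local_subnet_virtual` / `remote_subnet_virtual` attribute names are how I remember them from the Aviatrix provider, so please verify them against the provider version you run.

```hcl
# Sketch only: every name, CIDR and IP below is hypothetical.
variable "example_psk" {
  type      = string
  sensitive = true
}

resource "aviatrix_site2cloud" "mapped_example" {
  vpc_id                     = "example-vpc-id" # placeholder
  connection_name            = "mapped-example"
  connection_type            = "mapped"
  remote_gateway_type        = "generic"
  tunnel_type                = "route"
  primary_cloud_gateway_name = "example-gw"   # placeholder
  remote_gateway_ip          = "203.0.113.10" # documentation range
  pre_shared_key             = var.example_psk

  # Both ends really use the same range (the overlap) ...
  local_subnet_cidr  = "10.10.0.0/24"
  remote_subnet_cidr = "10.10.0.0/24"

  # ... so each side is seen by the other through a virtual range of equal size.
  local_subnet_virtual  = "100.64.1.0/24"
  remote_subnet_virtual = "100.64.2.0/24"
}
```

With this kind of mapping the local side would reach the remote hosts via 100.64.2.0/24, and the remote side would reach us via 100.64.1.0/24, so the real overlapping range never shows up on the other end.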

Let’s get back to customer’s case:

Initial setup

The problem here is that there is only one VPN tunnel and no HA at all. I assume Azure handles failover in its own way (this is just an active/standby deployment where the PIP moves to the 2nd instance on failover). Because a traffic selector is used for the tunnel, we can’t establish a 2nd backup tunnel from the Aviatrix HA GW. And here comes the problem: what do we do during maintenance of the primary GW (an upgrade, let’s say)? We lose connectivity.

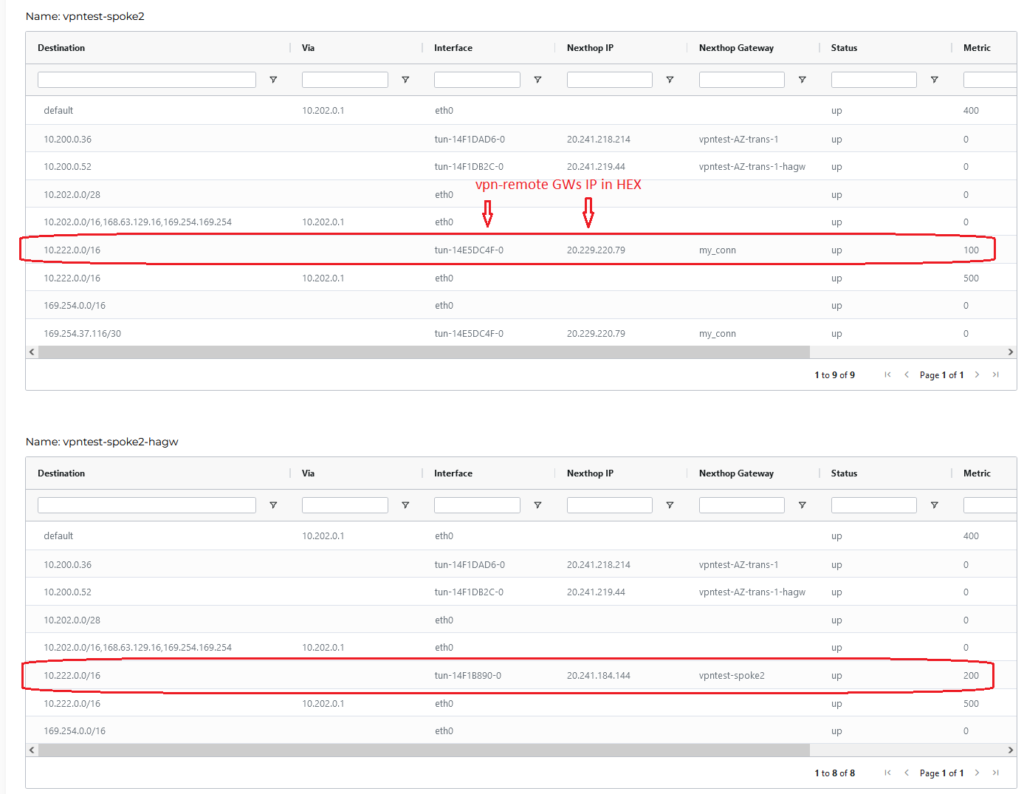

Let’s take a look at the spoke GWs’ route tables:

As there is only one tunnel, from the primary GW, we see that the next hop for 10.222.0.0/16 is the VTI interface (tun-14E5DC4F-0) with metric 100. The HA GW, on the other hand, points to the primary spoke GW. Makes sense, right?

Let’s try to make it better…

Option 1 - Single IP HA

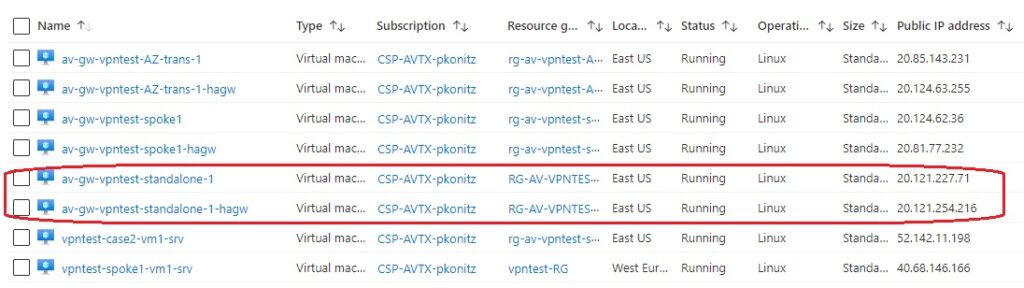

This feature was introduced some time ago (Controller version 6.4), but from what I can see it is not used very often. The idea behind it is to migrate the public IP of one GW to the standby one and re-establish the VPN. Simple, right? The feature requires a standalone GW and HAGW (Transit and Spoke GWs are not supported, as it’s harder to migrate a PIP with ActiveMesh enabled on them). An interesting use case is migrating a VPN from the on-prem world, where you have HA based on an FHRP (VRRP or HSRP) and actually only one active tunnel. With the two Aviatrix GWs we simulate that kind of environment.

Key things to remember:

- Route-based & Policy-based IPsec are supported

- Mapped and Unmapped connection types are supported

- Only public IP addresses are supported

- Only for standalone GWs (deployed in HA mode)

- AWS / AZURE support only

- No BGP support

This is a standalone GW, so the workflow for deploying the HAGW is slightly different. You need to go to:

GATEWAY -> EDIT -> Gateway for High Availability Peering

Or do it with Terraform by specifying just 2 additional attributes (peering_ha_subnet, peering_ha_gw_size):

```hcl
resource "aviatrix_gateway" "standalone_1" {
  cloud_type   = 8 # Azure
  account_name = var.avx_ctrl_account_azure
  gw_name      = "${local.env_prefix}-standalone-1"
  vpc_id       = module.mc_spoke_1.vpc.vpc_id
  vpc_reg      = var.azure_region
  gw_size      = "Standard_B1ms"
  subnet       = module.mc_spoke_1.vpc.public_subnets[0].cidr
  zone         = "az-1"

  # These two attributes are what deploys the HAGW
  peering_ha_subnet  = module.mc_spoke_1.vpc.public_subnets[0].cidr
  peering_ha_gw_size = "Standard_B1ms"
}
```

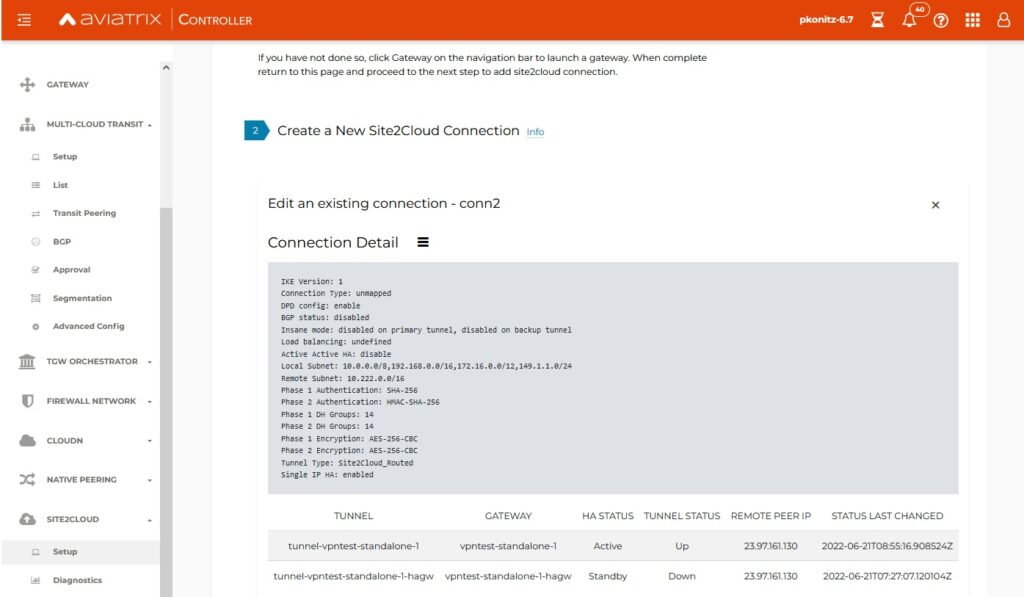

Once the HAGW is in place, we just need to create a new Site2Cloud connection with the following attributes:

```hcl
resource "aviatrix_site2cloud" "site2cloud_1" {
  vpc_id                     = "vpntest-spoke1:rg-av-vpntest-spoke1-308843:ecae61e1-f8df-40fc-a0cb-b2160528d87b"
  connection_name            = "conn2"
  connection_type            = "unmapped"
  remote_gateway_type        = "generic"
  tunnel_type                = "route"
  primary_cloud_gateway_name = "vpntest-standalone-1"
  remote_gateway_ip          = azurerm_public_ip.VNG-PIP.ip_address

  # Single IP HA: the backup tunnel targets the same remote IP from the HAGW
  ha_enabled               = true
  enable_single_ip_ha      = true
  private_route_encryption = false
  remote_subnet_cidr       = "10.222.0.0/16"
  local_subnet_cidr        = "10.0.0.0/8, 192.168.0.0/16, 172.16.0.0/12, 149.1.1.0/24"
  backup_gateway_name      = "vpntest-standalone-1-hagw"
  backup_remote_gateway_ip = azurerm_public_ip.VNG-PIP.ip_address

  custom_algorithms          = false
  pre_shared_key             = "secret$123"
  backup_pre_shared_key      = "secret$123"
  enable_dead_peer_detection = true
  enable_event_triggered_ha  = true
  enable_ikev2               = false
}
```

As soon as the tunnel comes up, the Controller is smart enough to program all UDRs in this spoke VNET, so 10.222.0.0/16 will appear there.

Of course, normally we want this prefix to be reachable from the rest of the spokes. To make that happen we need to inject it into the transit from the spoke.
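One way to do that injection in Terraform is sketched below, assuming the spoke is managed as an `aviatrix_spoke_gateway` resource and using its `included_advertised_spoke_routes` attribute (the Terraform counterpart of “Customize Spoke Advertised VPC CIDRs”, as far as I know). The gateway name, account, region, VNET ID and the 10.1.0.0/16 VNET CIDR are placeholders I made up; only 10.222.0.0/16 comes from the setup above. As I understand it, this list replaces what the spoke advertises, so the VNET’s own CIDR has to be listed as well.

```hcl
# Sketch only: everything except 10.222.0.0/16 is a hypothetical placeholder.
resource "aviatrix_spoke_gateway" "spoke_1" {
  cloud_type   = 8 # Azure
  account_name = "example-azure-account"
  gw_name      = "example-spoke-1"
  vpc_id       = "example-vnet-id"
  vpc_reg      = "West Europe"
  gw_size      = "Standard_B1ms"
  subnet       = "10.1.0.0/28"

  # Advertise the VNET's own CIDR plus the remote VPN prefix towards the transit
  included_advertised_spoke_routes = "10.1.0.0/16,10.222.0.0/16"
}
```

If the spoke is deployed through a module rather than the raw resource, the same setting is normally exposed as a module input, so check the module’s documentation for the exact name.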

TESTING

Let’s check how long a failover takes. In the initial state only one GW has its tunnel up

And both GWs are running

Keep in mind that you will see the public IPs swapped, but that is not all that happens behind the scenes. The Controller does the UDR programming for you, and the IPsec tunnel still needs to come up.

Tuning

There are a few options that have an impact on the time it takes to fail over. In my case these were the following:

Conclusion

This option is quite nice, but it would not meet the customer’s goal, which is maintenance. With some tuning of the parameters above I was able to lower the failover time to 150s. That is not a matter of detection; it is the slowness of the Azure API itself. On AWS I was surprised to see a time of around 30s, which is acceptable in my opinion. In Azure I think we could upgrade the GW faster than we could fail over.

It does serve its main purpose though: HA. The second GW is deployed in a different AZ, so we have our resiliency there, and the remote end points to a single IP only, which is what we wanted.

Follow-up – coming soon

The next option will be BGP with 2 different scenarios (Active/Passive vs Active/Active, with VNG).
