Previously we provided HA with a dedicated option: Single IP HA. But we want to improve on that initial setup.

I would say this is my favourite option as it's simple and neat. Both Aviatrix GWs establish tunnels and both can forward traffic towards the destination (increasing our available throughput with ECMP). We are using a VNG on the remote end, which gives us 2 options there:

- VNG Active / Passive

- VNG Active / Active

There is no additional cost to deploying the VNG in A/A, so I would go for that in the first place, but let's consider Active/Passive either way. An important thing to know is that BGP is supported on all Azure VPN Gateway SKUs except the Basic SKU.

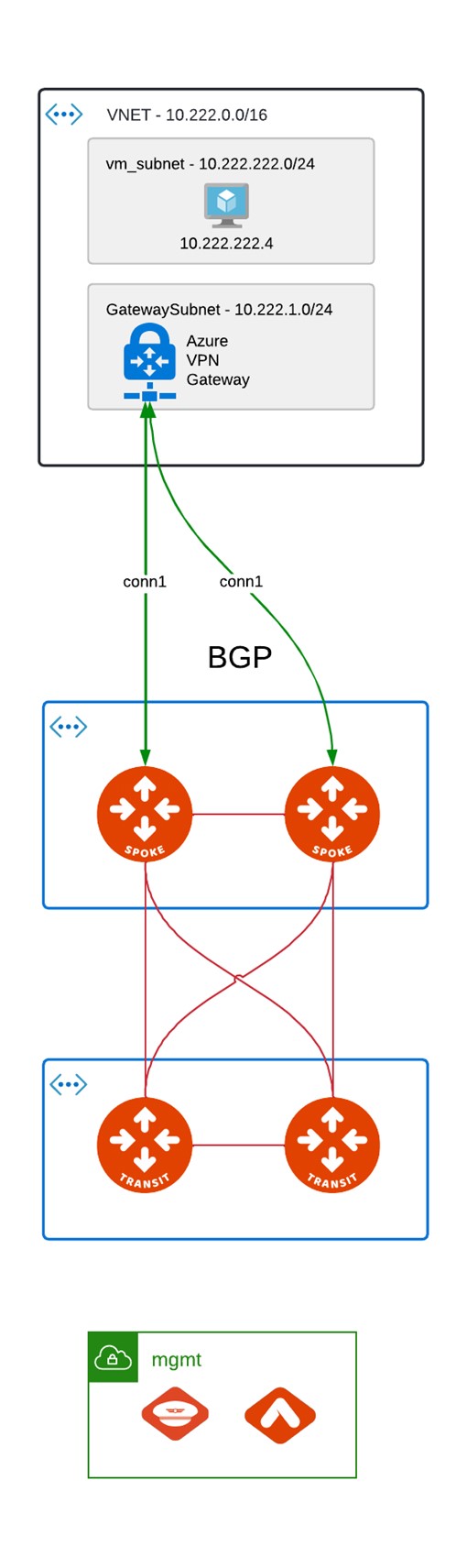

VNG - Active / Passive

This is our desired setup:

- 2 active tunnels from 2 Aviatrix spoke GWs.

- a single VNG instance being active at a time.

I've been told that the failover time for the VNG is a matter of seconds, so that might be a good option, but …

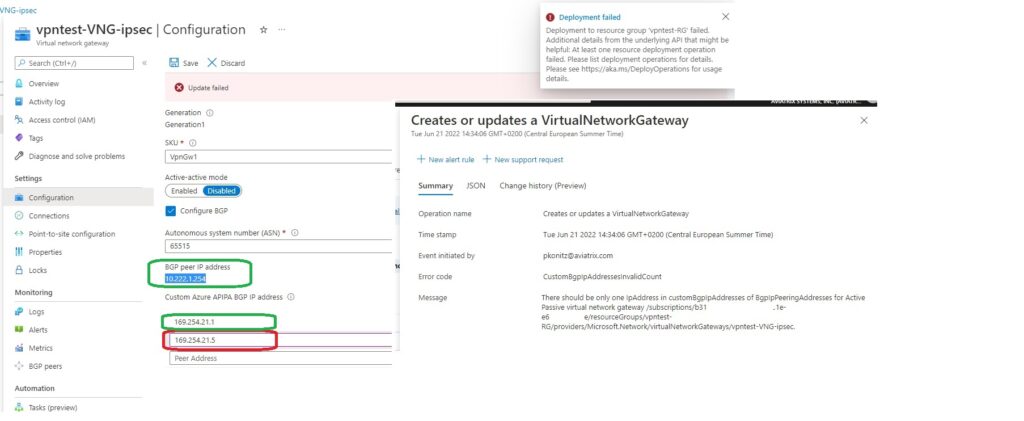

For a BGP setup, an Aviatrix GW requires a /30 for peering. So the primary GW uses one /30 subnet for BGP and the HA GW requires a different /30 subnet for its own BGP peering. Here comes the problem: VNG A/P allows only a single APIPA address allocation.

How can we address that limitation?

Why not use the APIPA address for the VNG-AVIATRIX_primary connection and another subnet for the VNG-AVIATRIX_ha connection?

We know that VNG (A/P) allows only 2 addresses to be assigned for BGP termination:

- One IP from GatewaySubnet (automatically assigned)

- One APIPA address (trying to add more APIPA addresses there returns an error)
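
For reference, the two BGP addresses on an active/passive VNG could be expressed in Terraform roughly as follows. This is a minimal, standalone sketch and not the configuration used in this post: the resource name `vng`, the gateway name, the `var.*` inputs and the `VpnGw1` SKU are assumptions, and the attribute names follow the azurerm 3.x provider (newer releases may rename some of them). Only the ASN 65515, the APIPA address 169.254.21.1 and `azurerm_public_ip.VNG-PIP` come from the setup described here.

```hcl
# Hypothetical inputs, declared only so the sketch stands on its own.
variable "location" { type = string }
variable "resource_group_name" { type = string }
variable "gateway_subnet_id" { type = string }

resource "azurerm_virtual_network_gateway" "vng" {
  name                = "vng-active-passive" # hypothetical name
  location            = var.location
  resource_group_name = var.resource_group_name

  type          = "Vpn"
  vpn_type      = "RouteBased"
  sku           = "VpnGw1" # assumption: any SKU other than Basic, which lacks BGP
  active_active = false    # active/passive mode
  enable_bgp    = true

  ip_configuration {
    name                          = "vnetGatewayConfig"
    public_ip_address_id          = azurerm_public_ip.VNG-PIP.id
    private_ip_address_allocation = "Dynamic"
    subnet_id                     = var.gateway_subnet_id
  }

  bgp_settings {
    asn = 65515

    peering_addresses {
      ip_configuration_name = "vnetGatewayConfig"
      # The one custom APIPA address allowed in A/P mode. The second BGP
      # address comes from GatewaySubnet and is assigned by Azure.
      apipa_addresses = ["169.254.21.1"]
    }
  }
}
```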

Let's do the trick and try defining our connection with these 2 available addresses.

On the Aviatrix side, when defining the external connection, we have the following:

169.254.21.1/30 – VNG APIPA

169.254.21.2/30 – Aviatrix_primary VTI tunnel

10.222.1.254/30 – real IP of BGP on VNG

10.222.1.253/30 – Aviatrix_ha VTI tunnel

```hcl
resource "aviatrix_transit_external_device_conn" "conn2" {
  vpc_id             = module.mc_spoke_1.spoke_gateway.vpc_id
  connection_name    = "conn2"
  gw_name            = module.mc_spoke_1.spoke_gateway.gw_name
  connection_type    = "bgp"
  tunnel_protocol    = "IPsec"
  bgp_local_as_num   = module.mc_spoke_1.spoke_gateway.local_as_number
  bgp_remote_as_num  = "65515"
  remote_gateway_ip  = azurerm_public_ip.VNG-PIP.ip_address
  remote_tunnel_cidr = "169.254.21.1/30,10.222.1.254/30"
  local_tunnel_cidr  = "169.254.21.2/30,10.222.1.253/30"
  custom_algorithms  = false
  ha_enabled         = false
  enable_ikev2       = true
  pre_shared_key     = "secret$123"
}
```

On the Azure side, of course, we need to define a Local Network Gateway.
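
A Local Network Gateway per Aviatrix gateway, plus a connection for each, might look roughly like the sketch below. It is standalone and hedged: all resource names and `var.*` inputs are hypothetical, attribute names follow the azurerm 3.x provider, and the HA peer address is an assumption based on the explanation in this section (the HAGW eth0 address 10.201.0.5 rather than its tunnel IP). The primary peer address 169.254.21.2 is the Aviatrix_primary VTI address listed above.

```hcl
# Hypothetical inputs, declared only so the sketch stands on its own.
variable "location" { type = string }
variable "resource_group_name" { type = string }
variable "vng_id" { type = string }
variable "avx_primary_public_ip" { type = string }
variable "avx_ha_public_ip" { type = string }
variable "avx_asn" { type = number }
variable "pre_shared_key" {
  type      = string
  sensitive = true
}

locals {
  # One entry per Aviatrix gateway: public IP and the BGP peer address
  # Azure should expect for it.
  avx_peers = {
    primary = { public_ip = var.avx_primary_public_ip, bgp_ip = "169.254.21.2" }
    ha      = { public_ip = var.avx_ha_public_ip, bgp_ip = "10.201.0.5" } # assumption: HAGW eth0
  }
}

resource "azurerm_local_network_gateway" "avx" {
  for_each            = local.avx_peers
  name                = "lng-avx-${each.key}"
  location            = var.location
  resource_group_name = var.resource_group_name
  gateway_address     = each.value.public_ip

  bgp_settings {
    asn                 = var.avx_asn
    bgp_peering_address = each.value.bgp_ip # an LNG accepts a single BGP peer address
  }
}

resource "azurerm_virtual_network_gateway_connection" "avx" {
  for_each                   = local.avx_peers
  name                       = "conn-avx-${each.key}"
  location                   = var.location
  resource_group_name        = var.resource_group_name
  type                       = "IPsec"
  connection_protocol        = "IKEv2"
  enable_bgp                 = true
  virtual_network_gateway_id = var.vng_id
  local_network_gateway_id   = azurerm_local_network_gateway.avx[each.key].id
  shared_key                 = var.pre_shared_key
}
```

Using `for_each` over a small map keeps the two gateways symmetrical, so the only per-gateway differences are the public IP and the BGP peer address.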

But Aviatrix has a different IP on the tunnel interface (the one used for BGP termination). That is true; however, it will respond to a BGP request from the IP the packet was sent to (in our case 10.201.0.5, which is eth0 of the Aviatrix HAGW).

```text
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
      inet 10.201.0.5 netmask 255.255.255.240 broadcast 10.201.0.15
      inet6 fe80::222:48ff:fe2f:1a4f prefixlen 64 scopeid 0x20
      …
tun-14E5F1DF-0: flags=209<UP,POINTOPOINT,RUNNING,NOARP> mtu 1436
      inet 10.222.1.253 netmask 255.255.255.252 destination 10.222.1.253
      inet6 fe80::5efe:ac9:5 prefixlen 64 scopeid 0x20
      …
```

Very important thing:

When APIPA addresses are used on Azure VPN gateways, the gateways do not initiate BGP peering sessions with APIPA source IP addresses. The on-premises VPN device must initiate BGP peering connections. – Azure doc

Only because of that does it all work. The primary Aviatrix GW initiates BGP towards the APIPA address of the VNG, but for the Aviatrix HAGW it must be the VNG that initiates the session towards the real IP of the GW itself (eth0, 10.201.0.5). We are not able to define this address for the tunnel interface on the Aviatrix connection. As mentioned before, the response will come from the interface the packet was destined to (again eth0).

Results…

Final test – traffic is also going through HAGW

Conclusion

With that simple trick we were able to bring 2 tunnels UP from a VNG in active/passive mode towards Aviatrix deployed in an HA manner.

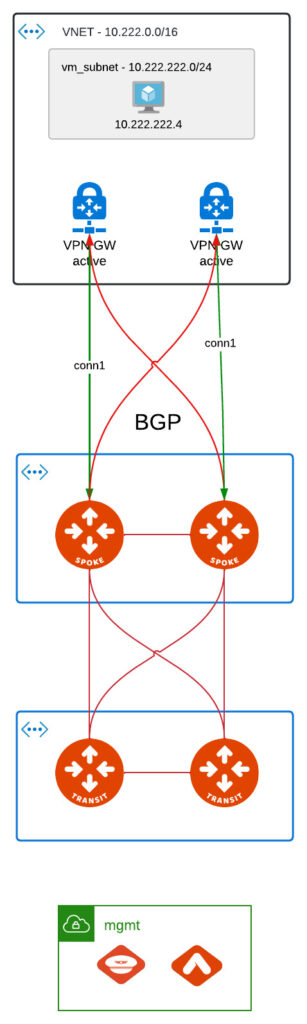

VNG - Active / Active

That setup gives more flexibility with regard to tunnel addressing. We have 2 active VNG instances, plus there is one enhancement to this:

General availability: Multiple custom BGP APIPA addresses for active VPN gateways
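
As a rough illustration of what that feature allows, an active/active VNG with more than one custom APIPA address per instance could be declared as below. This is a standalone sketch under assumptions: the resource name, the `var.*` inputs, the SKU and every address except 169.254.21.1 are hypothetical (picked from the range Azure reserves for custom APIPA addresses, 169.254.21.0 to 169.254.22.255), and the attribute names follow the azurerm 3.x provider.

```hcl
# Hypothetical inputs, declared only so the sketch stands on its own.
variable "location" { type = string }
variable "resource_group_name" { type = string }
variable "gateway_subnet_id" { type = string }
variable "vng_pip_ids" {
  type        = list(string)
  description = "IDs of the two public IPs, one per VNG instance"
}

resource "azurerm_virtual_network_gateway" "vng_aa" {
  name                = "vng-active-active" # hypothetical name
  location            = var.location
  resource_group_name = var.resource_group_name

  type          = "Vpn"
  vpn_type      = "RouteBased"
  sku           = "VpnGw1" # assumption: any SKU other than Basic
  active_active = true     # both instances terminate tunnels
  enable_bgp    = true

  # Active/active needs one IP configuration per instance.
  ip_configuration {
    name                          = "ipconfig1"
    public_ip_address_id          = var.vng_pip_ids[0]
    private_ip_address_allocation = "Dynamic"
    subnet_id                     = var.gateway_subnet_id
  }

  ip_configuration {
    name                          = "ipconfig2"
    public_ip_address_id          = var.vng_pip_ids[1]
    private_ip_address_allocation = "Dynamic"
    subnet_id                     = var.gateway_subnet_id
  }

  bgp_settings {
    asn = 65515

    # Several APIPA addresses per instance (illustrative values).
    peering_addresses {
      ip_configuration_name = "ipconfig1"
      apipa_addresses       = ["169.254.21.1", "169.254.21.5"]
    }

    peering_addresses {
      ip_configuration_name = "ipconfig2"
      apipa_addresses       = ["169.254.22.1", "169.254.22.5"]
    }
  }
}
```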

Here we can also achieve HA with 2 tunnels being UP. We are not able to bring up more because of:

- Azure LNG limitation: when defining an LNG you can assign only a single BGP peer address

- The Aviatrix way of defining connections with /30 subnets for the IPsec tunnel

So to make it work we can use the old trick of dummy addresses for the VTI. Let's use 1.1.1.1 and 1.1.2.1 for that. Terraform code could look like this:

Final Conclusion

BGP with ECMP is definitely a better option for providing such resiliency. Not only because we have 2 tunnels up and double the throughput, but also because during maintenance (an image upgrade, let's say) we send a BGP notification to the VNG to switch over the path. The desired goal is met and the customer is happy.
