This is another real-world scenario, which is why I decided to write about it in this post. I hope you enjoy it.

What do we have to start with?

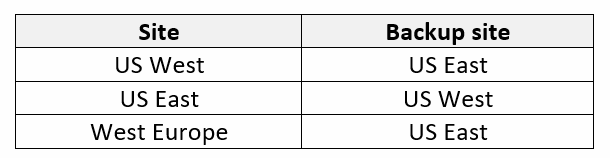

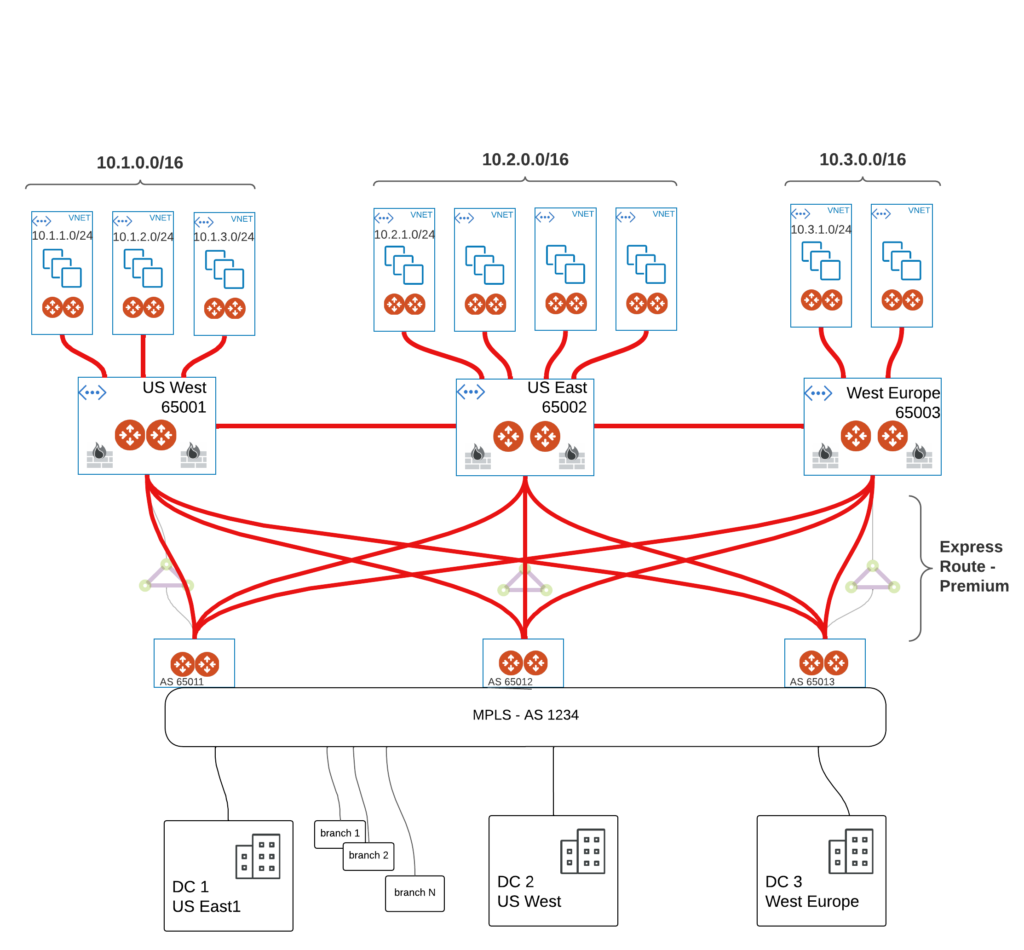

The customer has 3 regions, and each one has its own ExpressRoute (ExR) circuit towards on-prem. All of the on-prem locations are also connected over MPLS, which unfortunately is managed by a 3rd party. It is the typical situation where you outsource the administrative burden of the MPLS backbone, mainly because of cost. That is a fully understandable approach, but it has one potential drawback. Service providers love standards, and asking them for any custom change is like banging your head against a wall (unless you pay them so much that you are on the list of their most valuable customers, and even then it is not always easy and takes many escalations).

In this case we already have Aviatrix infrastructure deployed in Azure (Transit Gateways in each region and spokes attached to them). I hope you are already familiar with the Firenet insertion feature on the Transit Gateway. Firenet makes it really easy to deploy a 3rd-party next-gen firewall (e.g. Palo Alto, Fortinet, Check Point) at the Transit GW and redirect all necessary traffic towards it.

Requirements

- Each regional Transit GW has to have Firenet deployed (the main reason is to make sure that every single spoke attached to a given transit is inspected when talking to other spokes)

- Each on-prem location needs to have a backup entry point towards the cloud

- Latency should be as low as possible

- High bandwidth is required: 10 Gbps

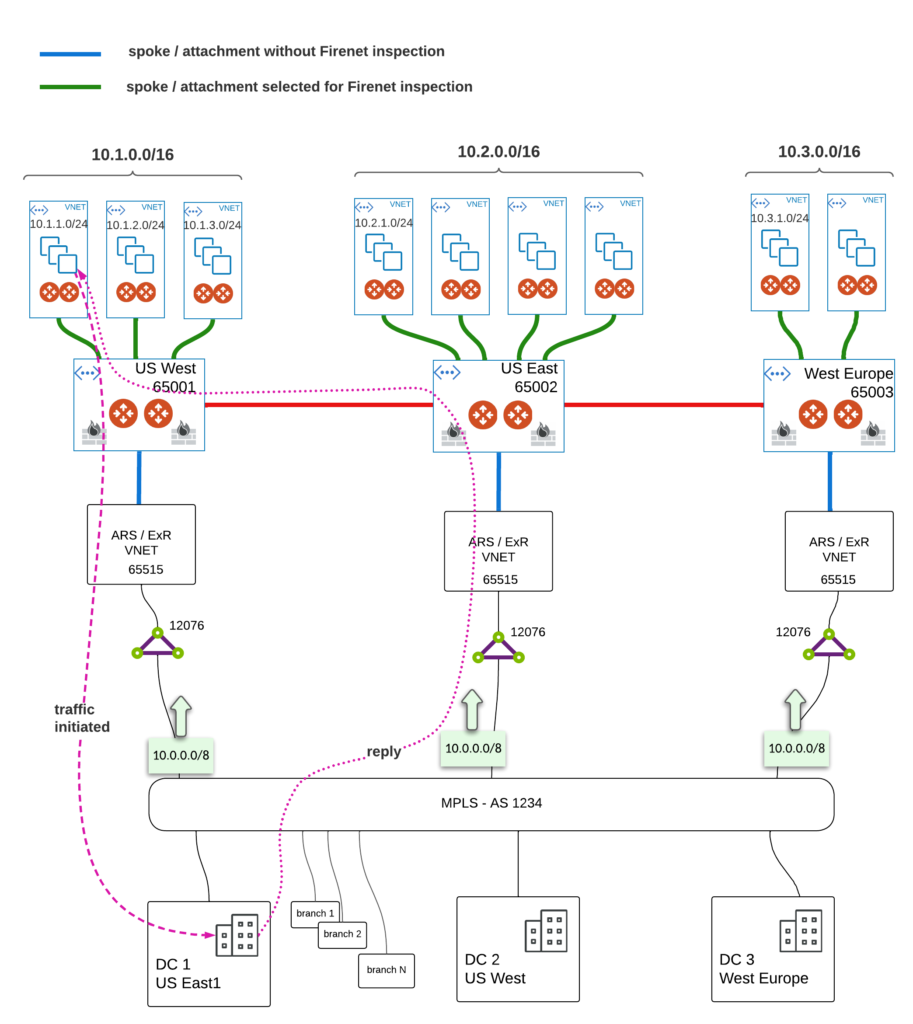

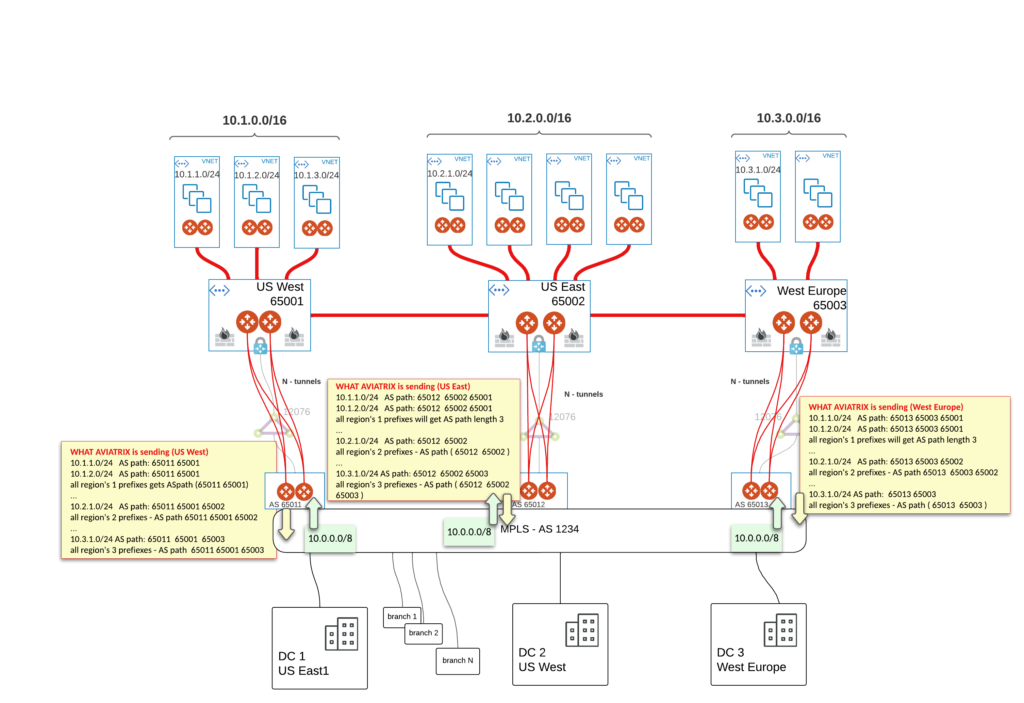

The diagram below shows the topology and the scope of responsibilities

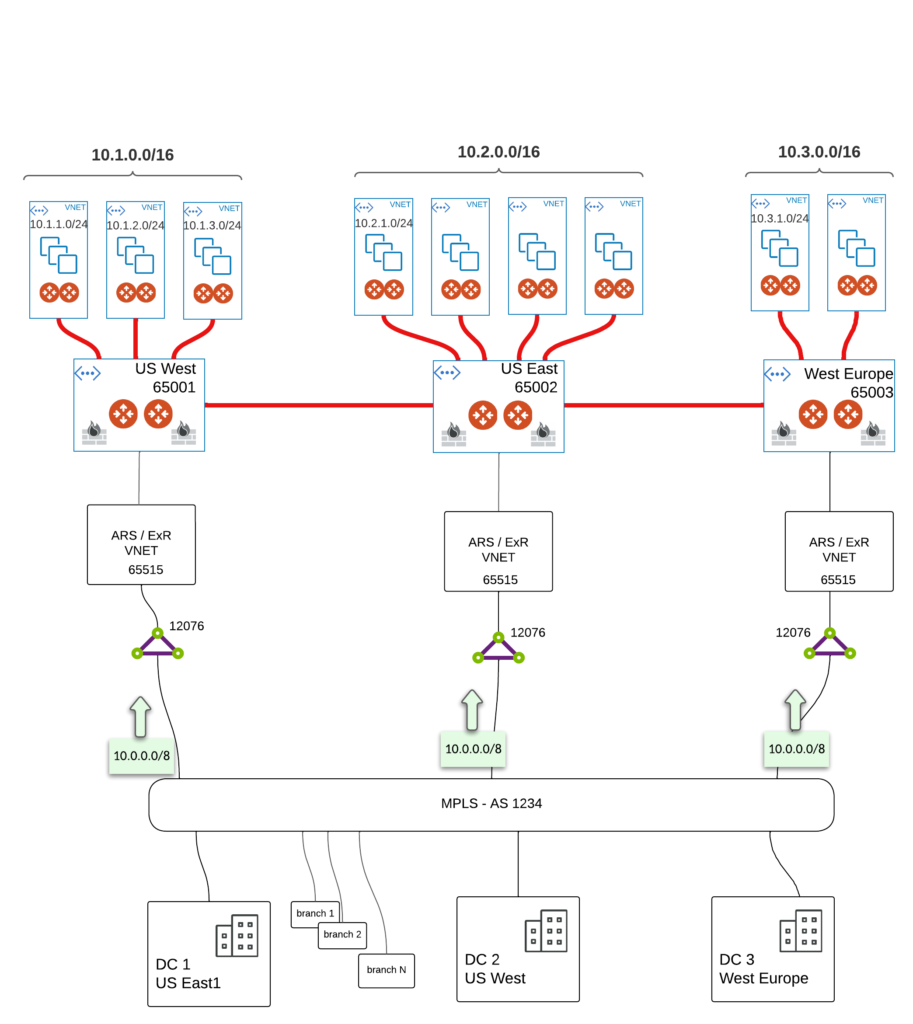

As you can see, MPLS is just a flat network. From the cloud point of view (the Aviatrix infrastructure), the MPLS cloud looks like a single router with 3 NICs. All of the on-prem prefixes are aggregated into a single 10.0.0.0/8 with a single AS number in the path (e.g. 1234). It all looks simple and straightforward, so what could go wrong, right?

Basically, our cloud infrastructure was flattened by ExR.

That can cause a lot of problems with asymmetric routing. Keep in mind that we have a firewall in each transit: if return traffic does not follow the initiator’s path, it is dropped, because the firewall in the other region never saw the SYN.
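To make the failure mode concrete, here is a minimal sketch of a stateful firewall that only forwards packets belonging to a flow whose SYN it has seen. The class, region names and IP addresses are illustrative assumptions, not part of any real firewall or Aviatrix API, and the state tracking is deliberately simplified.

```python
from dataclasses import dataclass, field


@dataclass
class StatefulFirewall:
    """Toy stateful firewall: it only passes packets of flows it saw start."""
    region: str
    sessions: set = field(default_factory=set)

    def process(self, src: str, dst: str, syn: bool = False) -> bool:
        """Return True if the packet is forwarded, False if it is dropped."""
        flow = frozenset((src, dst))   # direction-independent flow key
        if syn:
            self.sessions.add(flow)    # the initiator's SYN creates the state
            return True
        return flow in self.sessions   # anything else needs existing state


# Hypothetical addresses: a cloud spoke VM talking to an on-prem host in 10.0.0.0/8.
SPOKE_VM = "172.16.1.10"
ONPREM_HOST = "10.20.0.5"

fw_east = StatefulFirewall("us-east")
fw_west = StatefulFirewall("us-west")

# The SYN leaves the cloud through the US East transit and its firewall.
syn_forwarded = fw_east.process(SPOKE_VM, ONPREM_HOST, syn=True)

# Symmetric return: the reply comes back through US East and matches the session.
symmetric_reply = fw_east.process(ONPREM_HOST, SPOKE_VM)

# Asymmetric return: the reply lands in US West, whose firewall never saw the SYN.
asymmetric_reply = fw_west.process(ONPREM_HOST, SPOKE_VM)

print(syn_forwarded, symmetric_reply, asymmetric_reply)  # True True False
```

The last value is the drop described above: the US West firewall has no session for the flow, so the reply never reaches the spoke.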

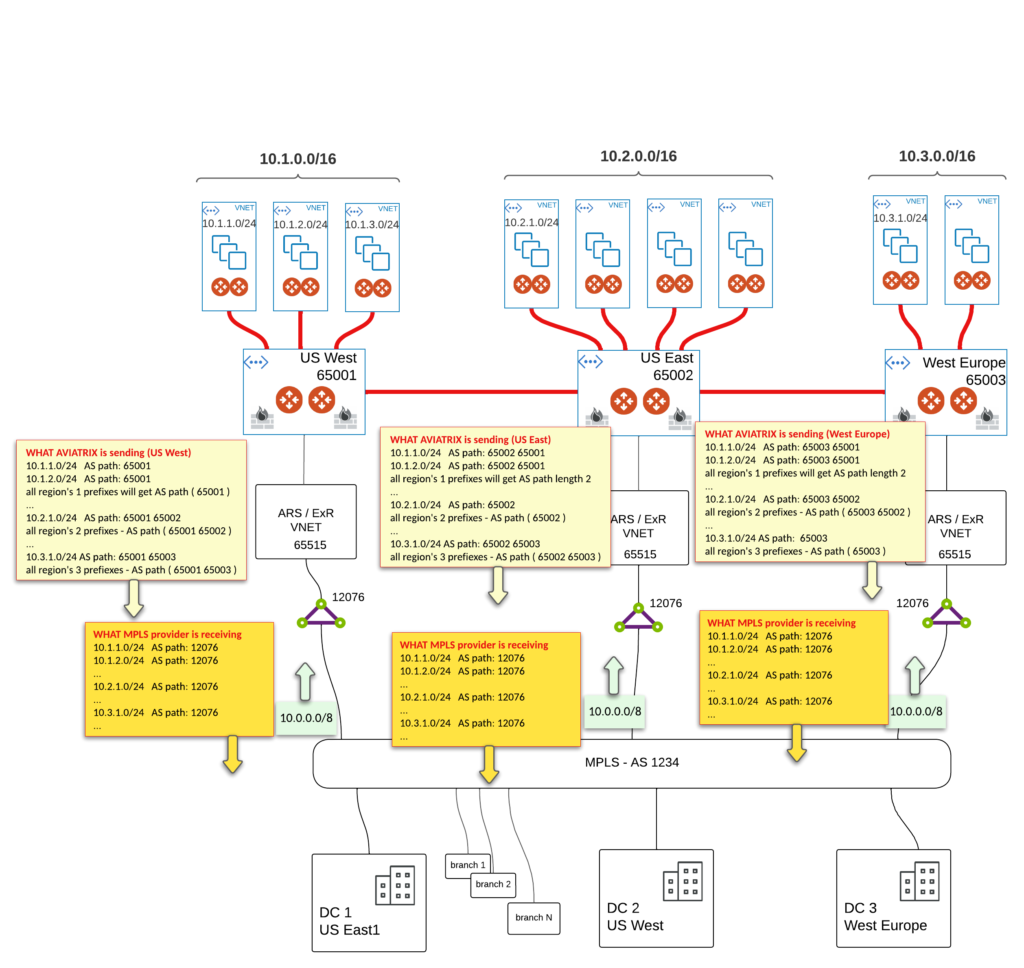

SOLUTION 1 - no Firewall inspection

This is the easiest way to let the traffic pass: traffic arriving over ExR is not sent through the firewall.

Regardless of the ExR entry point, the MPLS provider sends the reply to one of the Transit Gateways. That gateway is either:

- The Transit Gateway the destination spoke is attached to, which means the path was symmetric

- A Transit Gateway in a different region, in which case the traffic is forwarded directly over the transit peering and lands on the Transit Gateway of origin
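The two cases can be sketched as a small path function. This is only a model of the forwarding decision described above; the hop labels and region names are assumptions chosen for illustration.

```python
from typing import List


def return_path(entry_region: str, spoke_region: str) -> List[str]:
    """Hops taken by return traffic from on-prem to a spoke in Solution 1.

    entry_region: region of the Transit Gateway where the MPLS provider
                  delivers the reply.
    spoke_region: region of the Transit Gateway the destination spoke
                  is attached to.
    """
    path = ["MPLS", f"ExR {entry_region}", f"Transit GW {entry_region}"]
    if entry_region != spoke_region:
        # Asymmetric case: no firewall on the way, the reply goes straight
        # over the transit peering to the spoke's own Transit Gateway.
        path += ["Transit peering", f"Transit GW {spoke_region}"]
    path.append("Spoke")
    return path


symmetric = return_path("us-east", "us-east")
asymmetric = return_path("us-west", "us-east")

print(" -> ".join(symmetric))
print(" -> ".join(asymmetric))
```

The asymmetric path has two extra hops, which is where the additional latency in this solution comes from.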

Our main requirement is still met: all spokes are inspected.

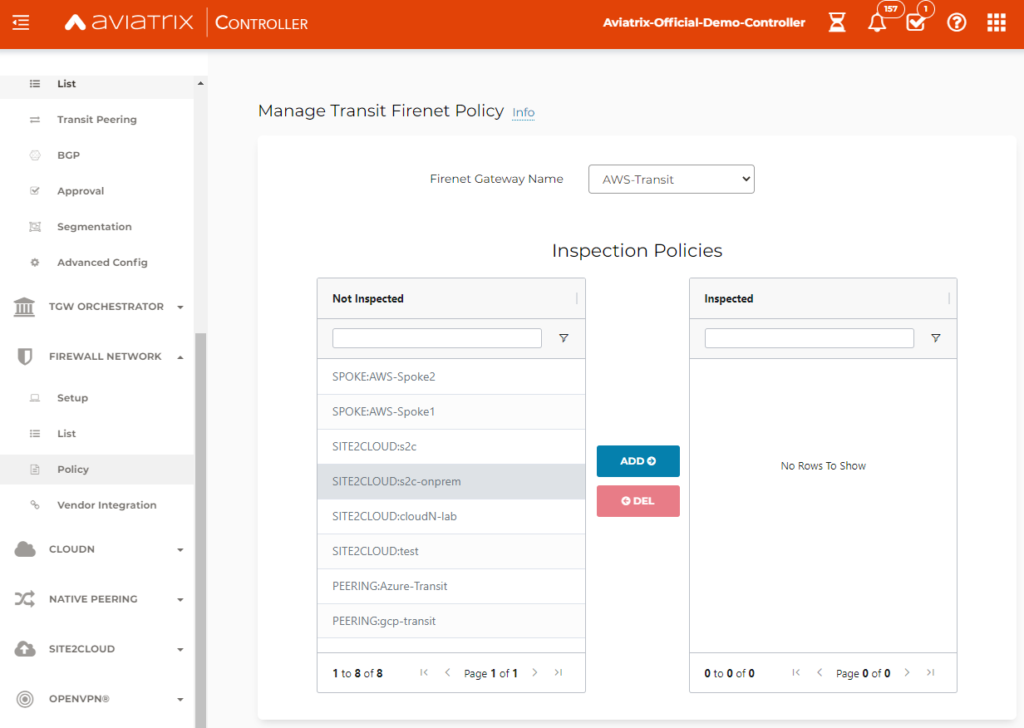

The policy where you select what is subject to inspection looks like the one below

(this is just an example, not taken from the scenario above, so the names are irrelevant)

That seems to be the simplest and easiest way of doing it. What do we accomplish this way?

- High throughput of ExR

- All spokes inspected

- Dynamic routing in place

On the downside:

- Potentially high latency if return traffic comes in through another region ☹

- Risk of reaching the limit of 200 prefixes, which is the maximum Route Server can push into ExR. We might hit it as our cloud infrastructure grows, which is what we hope for, right?
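A quick way to keep an eye on that risk is to count the prefixes you would advertise and compare the result with the limit. The sketch below uses Python's standard `ipaddress` module; the limit of 200 is the figure quoted above, and the spoke address plan is a made-up assumption. It also shows how much headroom summarisation would give, which only helps if your address plan is contiguous enough to summarise.

```python
import ipaddress

EXR_PREFIX_LIMIT = 200  # figure quoted in the text; verify it for your own setup


def prefix_headroom(prefixes, limit=EXR_PREFIX_LIMIT):
    """Return (raw count, count after summarisation, headroom after summarisation)."""
    networks = [ipaddress.ip_network(p) for p in prefixes]
    # collapse_addresses merges adjacent and overlapping networks
    summarised = list(ipaddress.collapse_addresses(networks))
    return len(networks), len(summarised), limit - len(summarised)


# Hypothetical address plan: 256 contiguous /24 spokes carved out of 172.16.0.0/16.
spoke_prefixes = [f"172.16.{i}.0/24" for i in range(256)]

raw, summarised, headroom = prefix_headroom(spoke_prefixes)
print(raw, summarised, headroom)  # 256 1 199
```

Advertised one by one, these spokes would already exceed the limit; summarised, they fit in a single prefix.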

SOLUTION 2 - overlay with BGP

Let’s not rely on the ExR route exchange, so that we overcome 2 challenges:

- Prefix limits (200)

- Stripping private AS numbers

To do that we need to build an overlay and terminate BGP between the Transit GWs and a termination point on the MPLS edge. There are many options and vendors that can do this; the device just needs to support IPsec and BGP. There is, however, one requirement we need to keep in mind: high throughput. It would not be a good idea to limit ourselves to just 1-2 IPsec tunnels, as on the cloud side that gives us only 2-4 Gbps. Aviatrix has its own technology for this, HPE (High Performance Encryption). In short, when using an Aviatrix Edge device we can build multiple IPsec tunnels and treat them as one big pipe.

By N tunnels on the diagram below I mean 16, 32 …

Of course we want high resiliency, so we put 2 Edges in each location. We assign a new AS number and establish BGP between the Edge and the MPLS provider (or the DC termination point).

That allows us to send BGP updates with the original AS path length. Looking deeper, we see one extra benefit: cost savings. We don’t need Route Server anymore, and we can put the ExR gateway directly in the Transit GW’s VNET.
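Why the original AS path length matters can be shown with a few lines of Python. The sketch below models a hop that strips private AS numbers (the ranges are the ones reserved by RFC 6996) and compares path lengths before and after. The AS numbers assigned to the transits are hypothetical, and the model ignores any AS number the stripping hop adds itself, since that would be the same on every path.

```python
PRIVATE_ASN_RANGES = ((64512, 65534), (4200000000, 4294967294))  # RFC 6996


def is_private_asn(asn):
    """True if the AS number falls in a range reserved for private use."""
    return any(low <= asn <= high for low, high in PRIVATE_ASN_RANGES)


def strip_private(as_path):
    """Model of a hop that removes private AS numbers from the AS path."""
    return [asn for asn in as_path if not is_private_asn(asn)]


# Hypothetical private AS numbers for the three regional transits.
EAST, WEST, EUROPE = 65001, 65002, 65003

# A US East spoke prefix as it would leave the cloud in each region.
paths = {
    "us-east": [EAST],
    "us-west": [WEST, EAST],
    "west-europe": [EUROPE, EAST],
}

native_lengths = {region: len(path) for region, path in paths.items()}
stripped_lengths = {region: len(strip_private(path)) for region, path in paths.items()}

print(native_lengths)    # {'us-east': 1, 'us-west': 2, 'west-europe': 2}
print(stripped_lengths)  # {'us-east': 0, 'us-west': 0, 'west-europe': 0}
```

With the native paths, on-prem routers can tell that US East is the closest entry point for this prefix. Once the private AS numbers are stripped, all three entry points look identical, which is the flattening the overlay avoids.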

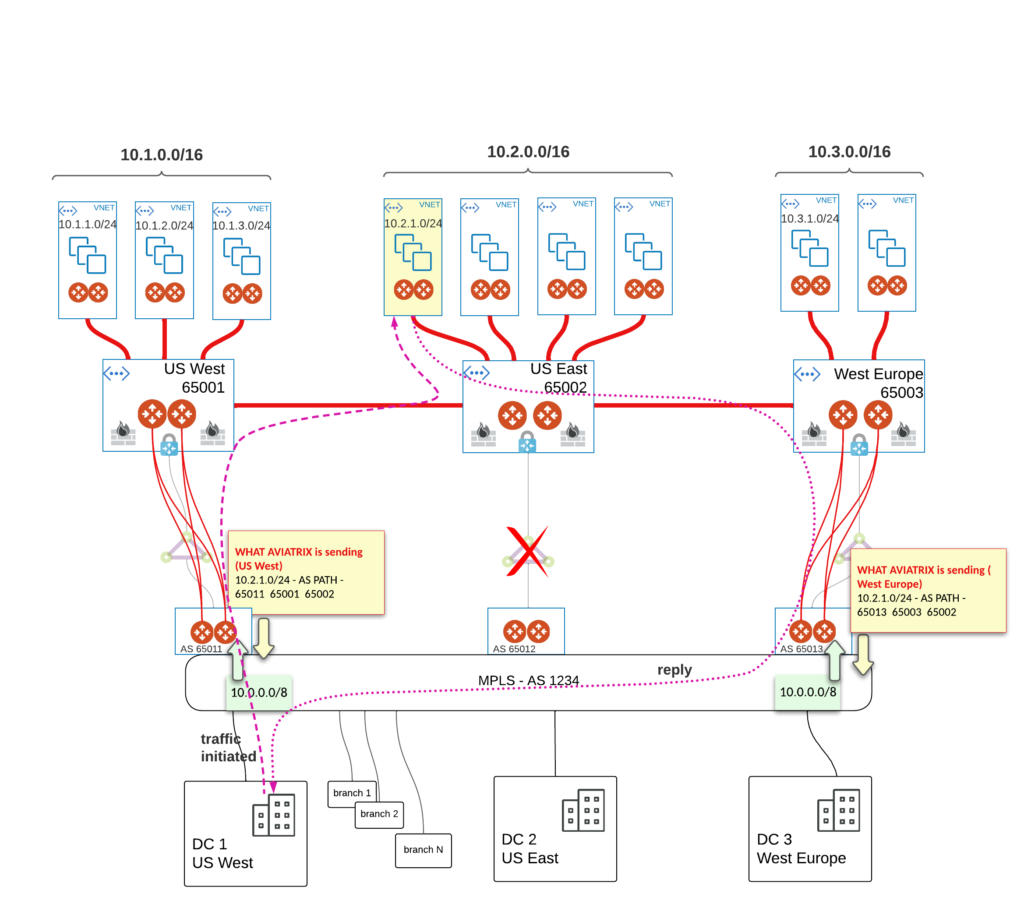

Challenge: one of the circuits / POPs goes down.

It seems unlikely, right? But let’s consider a scenario where the whole POP in one of the regions goes down.

We end up with a potentially asymmetric path, which we can deal with on the firewall side. Latency might hit us again, though. Let’s take a look at just one spoke in the US EAST region. This is what the Edges advertise into MPLS: the AS path length is 3 in both US WEST and WEST EUROPE.

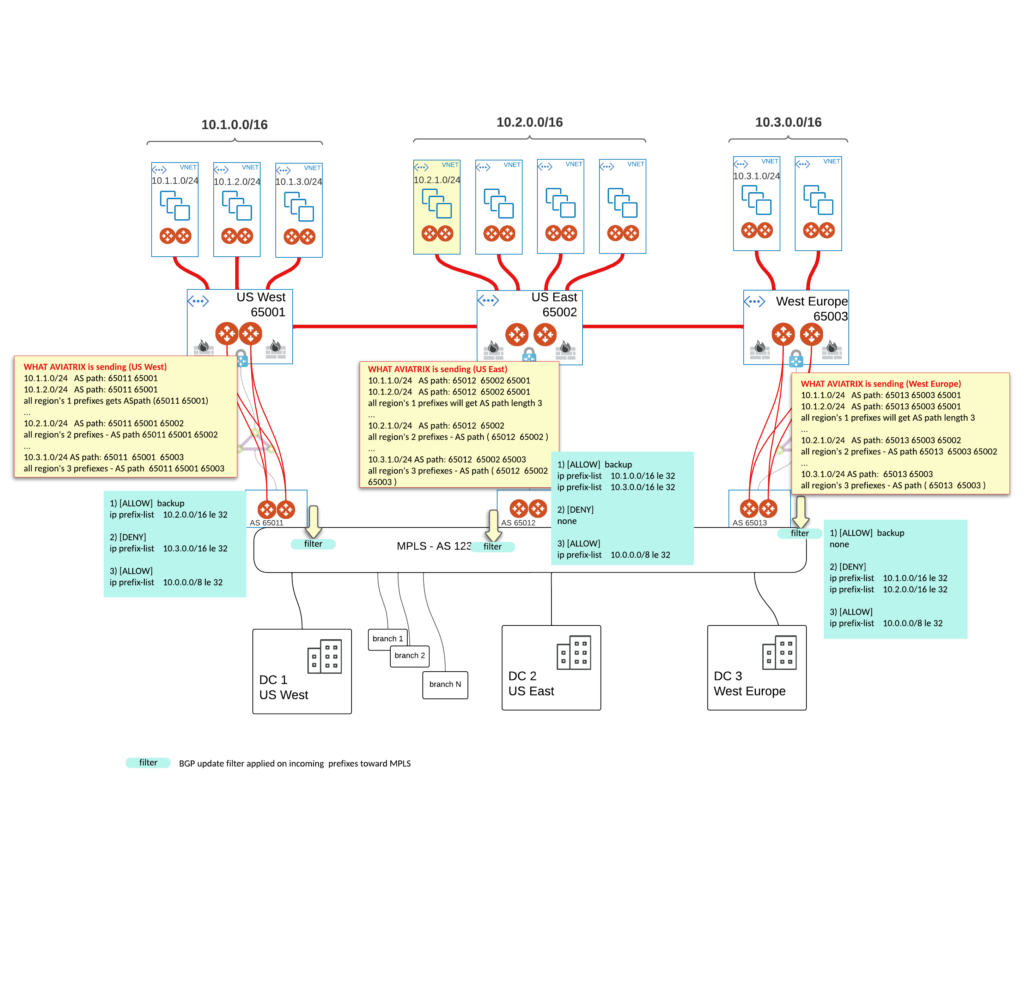

To address that, we could create region priorities and select just one backup path for each region. To get it right, we could measure latency both on the MPLS layer between all POPs and on the cloud layer between all Transit GWs. Then we should be able to estimate which region should act as the backup for the others.
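The estimate could be as simple as the sketch below. All latency values are invented placeholders to be replaced with real measurements, and adding the MPLS and cloud latencies into one detour cost is an assumption about how the two layers combine when a POP is down.

```python
# Hypothetical round-trip latencies in milliseconds; replace with measurements.
MPLS_LATENCY_MS = {
    ("us-east", "us-west"): 65,
    ("us-east", "west-europe"): 85,
    ("us-west", "west-europe"): 150,
}
CLOUD_LATENCY_MS = {
    ("us-east", "us-west"): 60,
    ("us-east", "west-europe"): 80,
    ("us-west", "west-europe"): 140,
}
REGIONS = ["us-east", "us-west", "west-europe"]


def latency(table, a, b):
    """Look up a symmetric latency regardless of key order."""
    return table.get((a, b), table.get((b, a)))


def detour_cost(region, backup):
    """Extra latency when the region's traffic enters the cloud via the backup region."""
    return latency(MPLS_LATENCY_MS, region, backup) + latency(CLOUD_LATENCY_MS, region, backup)


def pick_backup(region):
    """Pick the backup region with the lowest combined detour latency."""
    candidates = [r for r in REGIONS if r != region]
    return min(candidates, key=lambda r: detour_cost(region, r))


backups = {region: pick_backup(region) for region in REGIONS}
print(backups)
# {'us-east': 'us-west', 'us-west': 'us-east', 'west-europe': 'us-east'}
```

With these placeholder numbers, US WEST becomes the backup for US EAST, and US EAST backs up the other two regions.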

For our simulated failure in US EAST, it sounds natural that traffic should not take Europe’s path. Theoretically, it could look like this:

Here is what the filters might look like.
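As a rough model of the effect such filters have, the sketch below assumes they are implemented with AS-path prepending on the Edges that are not the chosen backup. That mechanism, the AS numbers, the prepend count and the priority table are all assumptions made for illustration; this is not actual Edge configuration.

```python
BACKUP_FOR = {"us-east": "us-west"}  # hypothetical priority: US West backs up US East
EDGE_ASN = {"us-east": 65101, "us-west": 65102, "west-europe": 65103}     # hypothetical
TRANSIT_ASN = {"us-east": 65001, "us-west": 65002, "west-europe": 65003}  # hypothetical
PREPEND_COUNT = 2  # hypothetical number of extra copies of the Edge AS number


def advertised_path(origin_region, edge_region):
    """AS path the Edge in edge_region announces for a spoke prefix from origin_region."""
    if edge_region == origin_region:
        return [EDGE_ASN[edge_region], TRANSIT_ASN[origin_region]]
    path = [EDGE_ASN[edge_region], TRANSIT_ASN[edge_region], TRANSIT_ASN[origin_region]]
    if BACKUP_FOR.get(origin_region) != edge_region:
        # Not the chosen backup: prepend the Edge's own AS number to lengthen the path.
        path = [EDGE_ASN[edge_region]] * PREPEND_COUNT + path
    return path


def best_exit(origin_region, available_edges):
    """Shortest AS path wins, assuming all other BGP attributes are equal."""
    return min(available_edges, key=lambda e: len(advertised_path(origin_region, e)))


# The US East POP is down, so only the other two Edges still advertise the prefix.
survivors = ["us-west", "west-europe"]
for edge in survivors:
    print(edge, len(advertised_path("us-east", edge)))  # us-west 3, west-europe 5

print(best_exit("us-east", survivors))  # us-west
```

Without the prepend both surviving paths would have length 3 and the MPLS side would have no reason to prefer US WEST over WEST EUROPE.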

SOLUTION 3 – using public AS numbers

We know ExR strips off private AS numbers, but if you own public ones you could potentially use them, and the AS path length would then be correct. You could theoretically hijack someone else’s public AS numbers (NOT RECOMMENDED) and still accomplish that, but what would the MPLS provider do in that case? That is a risk I wouldn’t take.

Just a consideration - multi-peer EDGE

Let’s assume the MPLS provider has its own logic for sending traffic to the cloud and the MPLS is not fully flat. We might assume that every DC takes its closest POP to reach all Azure prefixes. We would then again end up with an asymmetric path over the transits. There is one more way to overcome this, which might be handy if we need to select all attachments / connections for firewall inspection.

There is an option to attach an Edge to multiple Transit GWs. We need ExpressRoute Premium to make that happen, but it would help us in such a situation. Of course, latency is still our enemy here, so this might work better within a single geopolitical region.

A better use case for a multi-peer Edge is when you have 1 Transit GW in Azure and another one in AWS / GCP: you can attach the Edge to all Transit GWs, providing a redundant path for the transit peerings over your DC.

Conclusion

When it comes to big cloud infrastructures with multiple entry points, I believe that sooner or later we need to move to the overlay concept and propagate BGP properly into the on-prem layer. If all POPs are in one geopolitical region, latency might not be that problematic and we could use more ideas there, maybe even simplify what I proposed above.

Awesome article, Przemek! Thank you.

Just one question: in Solution 2, where are the Edges hosted? Seeing how with solution 1 ExR plugs directly into MPLS, how do we insert the Edges into what seems to be a managed infrastructure?

Thank you!

hi Nick,

I’m glad you liked it.

For Solution 2, it doesn’t matter where you put them. It can even be your own on-prem DC. All that is needed is connectivity from the Transit GW VNET to the Edges via ExR. In our case we’ve been testing in a 3rd-party MPLS provider’s environment. They have an ESXi environment there, and they deployed the Edge as just another VM in their POP location.

Great stuff Przemek, I’ve certainly picked up a few pointers 🙂